A 5x Page Speed Score Improvement for UpContent Gallery

At UpContent, we have always listened to our customers to help shine light on areas where we can provide the greatest value.

We also actively monitor the environment in which curated articles are used, to proactively bring value - rather than wait for the writing on the wall to become reality. Our most recent experience with this was responding to Google’s “Speed Update”.

This post pulls back the curtain a bit to outline how we approached optimizing UpContent Gallery, the solution that brings curated content to our customers’ websites in a way that maximizes SEO benefit and helps drive conversions - all while respecting the rights of the publishers whose content is being presented.

Web developers and technical SEO savants (or aspiring savants), this one’s for you!

Why Does Site Load Speed Matter?

With the majority of today’s internet traffic coming from mobile devices, the ability for the visitor to quickly be presented with your site’s content matters more than ever before.

Even an extra second of loading time can significantly impact a visitor’s likelihood to bounce - diminishing the ROI your site provides.

In 2018, Google incorporated these findings into its ranking algorithm by announcing that page speed would be a ranking factor for searches on mobile.

How Does UpContent Fit In With Marketing Websites?

Our Gallery offering lets users showcase the fruits of their curation process without having to routinely access their site’s CMS. It serves as a resource for their visitors by bringing the articles our customers vet to their websites in a variety of custom styles and formats - all with the ability to increase page dwell time and drive conversions.

We host our code in AWS S3 and CloudFront.

Our Gallery is written as a Polymer component that our clients add to their pages; the component then loads the list of articles from our API on page load.

Each article is loaded as a “card” on the site, styled with custom CSS, and upon click the article is opened in a new browser tab - on the original publisher’s site with an optional call-to-action overlay in order to entice lead conversion.

We view this willingness of our customers to place code we provide on their site - especially on those pages that have high SEO value to them - as a high honor and a level of trust we protect dearly.

While the code deployed at the time was far from “broken”, we saw its potential impact on client sites’ visitors and SEO performance, and focused on making our code as performant as possible.

How We Approached Page Speed Problems

In order to know what to improve, and how successful we were, we needed to identify a quantifiable baseline.

One additional complication was that, since we did not control the sites loading our code, any change to the component’s entry point URLs could not reach existing sites unless our clients updated them.

Further, if these entry point URLs needed to be updated, it needed to be done in a way that the current configurations would continue to work until those updates were made - knowing that our customers have varying workflows and timetables for when an update of this sort would be possible.

We elected to use total transfer size per file type (via Chrome DevTools) and file size on disk (via the file system) as the key metrics in assessing whether our efforts improved or degraded performance.

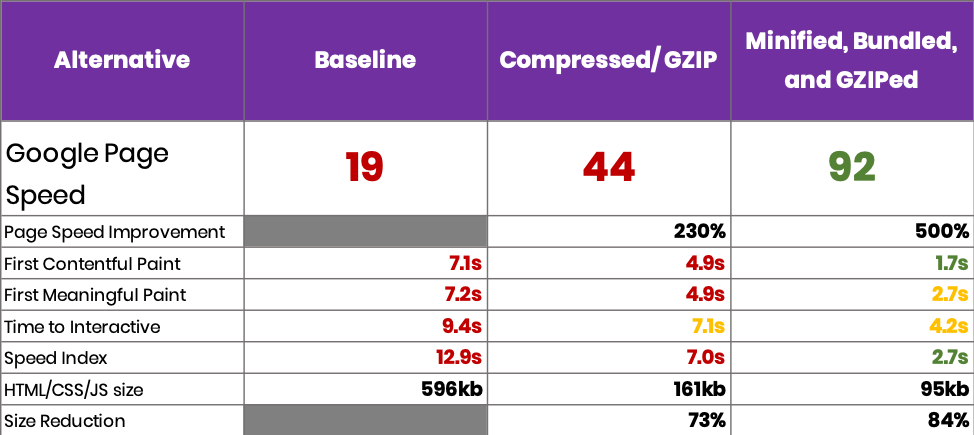
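As a rough illustration of this kind of baseline, the sketch below totals bytes per file extension and computes a percentage reduction between two measurements. The function names and the resource list are hypothetical, and the byte counts are placeholder values rather than our measured data.

```javascript
const path = require('path');

// Group resources by file extension and total their sizes in bytes.
// `resources` is a hypothetical list of { name, bytes } records, for example
// copied by hand from the browser's network panel.
function totalBytesByType(resources) {
  const totals = {};
  for (const { name, bytes } of resources) {
    const ext = path.extname(name).toLowerCase() || '(none)';
    totals[ext] = (totals[ext] || 0) + bytes;
  }
  return totals;
}

// Percentage reduction from a baseline measurement to a new one.
function percentReduction(before, after) {
  return ((before - after) / before) * 100;
}

// Placeholder numbers for illustration only.
const baseline = [
  { name: 'gallery.html', bytes: 40000 },
  { name: 'gallery.js', bytes: 120000 },
  { name: 'helpers.js', bytes: 30000 },
  { name: 'styles.css', bytes: 10000 },
];

console.log(totalBytesByType(baseline));
```

Recording the same totals after each change makes it easy to see whether a step helped or hurt.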

We quickly determined that efforts such as Compression/GZIPing, Minification, and Bundling each gave notable total size improvements.

For each change, we also turned to the Google Page Speed index and load times on the Google Page Speed report - measured against a staging environment that served as our “control”. We measured each meaningful set of changes, and recorded the results.

We ended up breaking our project into distinct stages based on how hard each change would be to implement, its value measured in bundle size, and whether it would require clients to manually update their sites.

UpContent Gallery v2

The easiest change by far was file compression/GZIPing.

For an AWS CloudFront site such as ours, this was as simple as turning on the Compression setting.

All major modern browsers will request compression by default, and CloudFront will automatically fall back to uncompressed for browsers that don’t support it (💪).

There is also a webpack plugin that will GZIP/compress content for you if your cloud provider requires your files be pre-compressed.
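To get a feel for why compression is such an easy win for text assets, here is a minimal sketch using Node's built-in `zlib` module. The repeated string is a made-up stand-in for a JavaScript file, so the exact ratio will differ from real assets.

```javascript
const zlib = require('zlib');

// Repetitive text stands in for a JavaScript or HTML asset (hypothetical content).
const source = 'function renderCard(article) { return article.title; }\n'.repeat(200);

const original = Buffer.from(source, 'utf8');
const compressed = zlib.gzipSync(original);

// Decompressing returns the exact original text, which is what the browser
// does transparently when a response arrives with gzip encoding.
const restored = zlib.gunzipSync(compressed).toString('utf8');

console.log(`original: ${original.length} bytes`);
console.log(`gzipped:  ${compressed.length} bytes`);
```

Text formats such as JavaScript, HTML, and CSS tend to compress well; already-compressed formats such as PNG or JPEG usually gain little.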

The next major group of changes was minification and bundling. Since this required client sites to change which scripts they loaded, we decided to do it as a second stage. In production, we stood this up as a separate site so that clients could upgrade when they wanted.

For minification and bundling we chose webpack, mostly because of familiarity with it versus the similar tool, rollup. Either is a great option, but since they are relatively similar, we went with the more familiar choice.

We ended up using the following plugins and loaders:

- babel-loader with babel-preset-env for both html and js

- polymer-webpack-loader for html files

- copy-webpack-plugin to copy some files around and transform one

- We also use uglifycss to minify another CSS file that wasn’t part of the main app and thus was not included in the main bundles

When we did bundle and minify, one issue we ran into was that initially it actually increased our total bundle size (😱).

We found this was being caused by loading a library called moment.js that conditionally loads non-trivially sized locale files depending on the browser’s language and location settings.

Since webpack didn’t have this information at bundle time, it was including the locale files for the whole world in the bundle.

To solve this, we loaded moment.js minified, but not bundled, from cdnjs, a free third-party content delivery network provided by Cloudflare.

This carried an added benefit: because the link points to a specific version, and because cdnjs.cloudflare.com is so widely used for common libraries like moment.js, the browser is likely to already have a cached copy it can load - improving performance beyond what we’d originally planned (😌).
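One common way to keep a library out of a webpack bundle while still importing it in source code is the `externals` option. The sketch below assumes that approach, which may not match our exact setup; the version number and the `cdnjsUrl` helper are illustrative only.

```javascript
// Illustrative version pin; use whichever version the code was tested against.
const MOMENT_VERSION = '2.24.0';

// Hypothetical helper that builds a versioned cdnjs URL.
function cdnjsUrl(library, version, file) {
  return `https://cdnjs.cloudflare.com/ajax/libs/${library}/${version}/${file}`;
}

// The host page would load this URL in a <script> tag before the bundle.
const momentUrl = cdnjsUrl('moment.js', MOMENT_VERSION, 'moment.min.js');

// webpack config fragment: `import moment from 'moment'` resolves to the
// global `moment` variable at runtime instead of being bundled.
const externalsConfig = {
  externals: {
    moment: 'moment',
  },
};

console.log(momentUrl);
```

With this in place, neither moment.js nor its locale files end up in the bundle.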

Google Page Speed Score Improved 5x

The results when everything was said and done were quite dramatic. With a 5x score improvement, an 84% size reduction, and roughly a 75% reduction in various load time metrics, we clearly accomplished our goals - and then some.

The table below shows where we started, and the related improvements seen through each stage of our efforts (🥴).

Additional Opportunities for Improvement

As a part of the process, we also considered rewriting to reduce the dependencies and the size of the source itself.

To explore this opportunity, we gathered data to determine the payoff. We determined that it would be a high-value/high-cost initiative and that we could get far more value from much simpler items.

Looking at the breakdown of modules/dependencies in our project, it quickly became apparent that the vast majority of our weight came from Polymer, and related dependencies, that we aren’t currently fully utilizing.

By rewriting in either a lightweight framework such as Preact or Ignite, or forgoing a JavaScript framework altogether and rewriting in vanilla JavaScript, we could likely halve our code bundle size.

If we decide to stick with Polymer, one thing we would consider is loading the Polymer and webcomponents.js code from cdnjs, in the hope that the browser already has a cached copy.

We might also consider moving all scripts to a single entry point script that loads all additional dependencies from cdnjs, but figuring out how to make this coexist with webpack would be a challenge that requires additional research.
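To make the single entry point idea concrete, here is a minimal sketch of a loader that injects script tags one at a time. It is an untested idea rather than something we have shipped, and the `loadScript` and `loadAll` names are hypothetical. The `doc` parameter defaults to the browser's `document` and exists so the functions can be exercised outside a browser.

```javascript
// Inject one <script> tag and resolve once the browser has loaded it.
function loadScript(url, doc = document) {
  return new Promise((resolve, reject) => {
    const script = doc.createElement('script');
    script.src = url;
    script.onload = () => resolve(url);
    script.onerror = () => reject(new Error(`Failed to load ${url}`));
    doc.head.appendChild(script);
  });
}

// Load scripts strictly in order, so later ones can rely on earlier ones.
async function loadAll(urls, doc = document) {
  const loaded = [];
  for (const url of urls) {
    loaded.push(await loadScript(url, doc));
  }
  return loaded;
}

// In a browser, the entry point script might then call something like:
//   loadAll([dependencyUrl, galleryBundleUrl]).then(startGallery);
// where all three names are placeholders.
```

Loading sequentially keeps dependency order simple, at the cost of not fetching the scripts in parallel.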

A Retrospective

As we reflected on the effort, we found that the biggest driver of its success was the objective approach we applied and outlined. Most importantly, we:

- Identified and researched alternatives for solving the challenge prior to writing any code so that we could properly scope the effort in our roadmap,

- Measured “value” in the context of customer benefit,

- Evaluated effort vs. expected value by incrementally improving through each alternative, and

- Pruned when the expected value became less than the effort expended.

Implementation with the customer in mind was also critical and ensured that we could provide additional value without disrupting their ability to realize it - and implement on the timetables that fit best within their organization.

We look forward to hearing your thoughts on our approach and outcomes - and to continuing to bring you solutions that will ease the burden of content curation and allow you to become a trusted resource for your customers.
